My parents keep a candy jar on the coffee table. It usually contains M&M’s.

This past week the jar had almond-looking M&M’s in Christmas colors, and I stuffed a handful into my face because that’s how M&M’s are meant to be eaten.

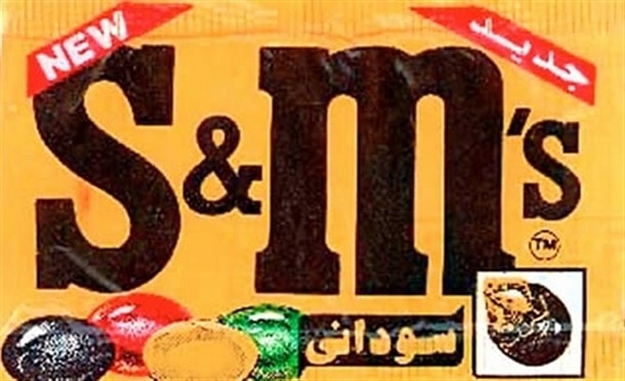

I did not notice that the M&M’s were missing the “m”.

Turns out they weren’t almond M&M’s. They were JORDAN ALMONDS, which are a heathen abomination.

This is troubling, because I am a Bayesian learning machine. If you were to dissect my brain and model it as a Bayesian network, it would look like this:

Bayesian learning updates the probability assigned to a hypothesis according to how well that hypothesis explains the observed data. Each candy I eat reinforces or weakens the dependency between observations, depending on whether or not it is a flavor I like.
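For the curious, here is a minimal sketch of a single Bayesian update. The function name `bayes_update`, the two hypotheses, and every probability below are made up for illustration; this is not a measurement of my actual brain.

```python
def bayes_update(prior, likelihood):
    """Return the posterior over hypotheses after one observation.

    prior:      {hypothesis: P(hypothesis)}
    likelihood: {hypothesis: P(observation | hypothesis)}
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(observation)
    return {h: p / evidence for h, p in unnormalized.items()}


# Made-up belief about an oblong, holiday-colored candy before looking closely.
prior = {"almond_mm": 0.9, "jordan_almond": 0.1}

# Made-up chance of seeing NO "m" label under each hypothesis.
no_label = {"almond_mm": 0.05, "jordan_almond": 1.0}

posterior = bayes_update(prior, no_label)
print(posterior)
```

With these invented numbers, noticing the missing “m” would have flipped my belief toward Jordan almond before the candy reached my face.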

The first observation is color. The color scheme affects each observable candy shape differently. Standard M&M colors (red, orange, yellow, green, blue, brown) on an oblong candy have always turned out to be almond M&M’s, which are dericious. However, standard M&M colors on a fat candy mean that it is likely a peanut butter or pretzel M&M, both of which are terrible. Green/white or green/brown colors on a fat candy indicate mint or coconut M&M’s. Those are awesome.

Color observation has weak interaction with round-shaped candies, because round M&M’s can be printed in custom colors. Thus the round shape requires an “m” label observation. Round candies without a label might be M&M knockoffs, or far worse: Reese’s Pieces.

I ran into trouble here when I observed seasonal colors on an oblong shape, which, according to 100% of prior observations, should have been holiday almond M&M’s. If I updated my model to reject all unlabeled oblong candies, I would also lose out on plain chocolate-covered almonds.
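One standard way to avoid overreacting to a single bad candy is a Beta-Bernoulli update, sketched below. This is an assumption about how one might model it, not a description of my actual network; the helper `posterior_mean`, the uniform prior, and the count of 50 past candies are all hypothetical.

```python
def posterior_mean(good, bad, prior_good=1.0, prior_bad=1.0):
    """Mean of a Beta posterior for P(candy is good).

    prior_good and prior_bad are pseudo-counts; 1 and 1 is a uniform prior.
    """
    return (prior_good + good) / (prior_good + prior_bad + good + bad)


# Hypothetical history: 50 oblong holiday-colored candies, all almond M&M's.
before = posterior_mean(good=50, bad=0)

# Then one Jordan almond.
after = posterior_mean(good=50, bad=1)

print(f"before: {before:.3f}, after: {after:.3f}")
```

Under these made-up counts, belief that the next oblong holiday candy is good drops only from about 0.98 to about 0.96, so one Jordan almond does not justify swearing off the whole category.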

The problem is that my Bayesian model has no direct interaction between the first and third layers: color and “m” label.

Unlike Bayesian hierarchical models, Deep Learning machines do not start by assuming the structure of interactions between observations. Maybe classification by color and shape is not the best way to represent my data. Maybe I should combine observations in different ways.

An untrained deep learning machine looks something like this:

Within the octagon are hidden layers that can model interactions among all of the observations, rather than a hand-picked few. As the machine is trained, the weights on irrelevant interactions shrink and relevant interactions develop stronger connections.
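To make "untrained" concrete, here is a rough sketch of a forward pass through one hidden layer in plain Python. The three input features, the four hidden units, and the random weights are all assumptions for illustration; a real deep learning machine would have more layers, bias terms, and a training loop.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(features, hidden_weights, output_weights):
    """One hidden layer: every hidden unit sees every observation."""
    hidden = [
        sigmoid(sum(w * x for w, x in zip(unit_weights, features)))
        for unit_weights in hidden_weights
    ]
    return sigmoid(sum(w * h for w, h in zip(output_weights, hidden)))


random.seed(0)  # fixed seed so the sketch is repeatable

# Hypothetical observations: [holiday colors, oblong shape, has "m" label]
candy = [1.0, 1.0, 0.0]

# Untrained network: 4 hidden units with random weights, no biases.
hidden_weights = [[random.uniform(-1, 1) for _ in candy] for _ in range(4)]
output_weights = [random.uniform(-1, 1) for _ in range(4)]

score = forward(candy, hidden_weights, output_weights)
print(score)  # some value between 0 and 1; meaningless until trained
```

Note that color, shape, and label all feed every hidden unit, so nothing stops the network from learning a direct color-and-label interaction once it has eaten enough candy.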

Eventually, each layer becomes a different representation of the observations. This is similar to how a block of text can be represented as groups of characters in one layer, words in another, or sentences in a third. I don’t know how my brain will ultimately represent M&M nodes. Maybe some third-order function of color, size, and time of year, or maybe something far more complex.

A Deep Learning machine requires far more data to train, which means I need to eat a lot more candy if I want to do some Deep Learning.

See Also:

Introduction to Deep Learning Algorithms